Driving AI Visibility in Search with Smart LLM Optimization

Over 2.5 billion prompts are sent to ChatGPT every single day now. That number indicates people no longer just “Google it,” but instead ask AI, receive instant answers, and move on. This change presents a significant challenge for your brand, as your content might rank well on Google but still be ignored by LLMs like ChatGPT, Gemini, and Perplexity.

That means all the time and effort you put into SEO could end up invisible to the tools your audience now uses. If you’ve noticed fewer leads, weaker traffic, or less engagement lately, it might not be your site design or ads. It’s more likely that your content is optimized for search bots, while your audience is interacting with AI.

At ThunderClap, we’ve rebuilt over 129 B2B websites for SaaS, fintech, and enterprise tech clients, including Amazon, Storylane, Factors, Deductive AI, and Razorpay. More importantly, we build sites that convert and that AI systems recognize.

In this guide, you’ll discover our smart LLM optimization framework (SLOF), a clear, five‑step system to help your content appear inside generative answers where your audience now lives.

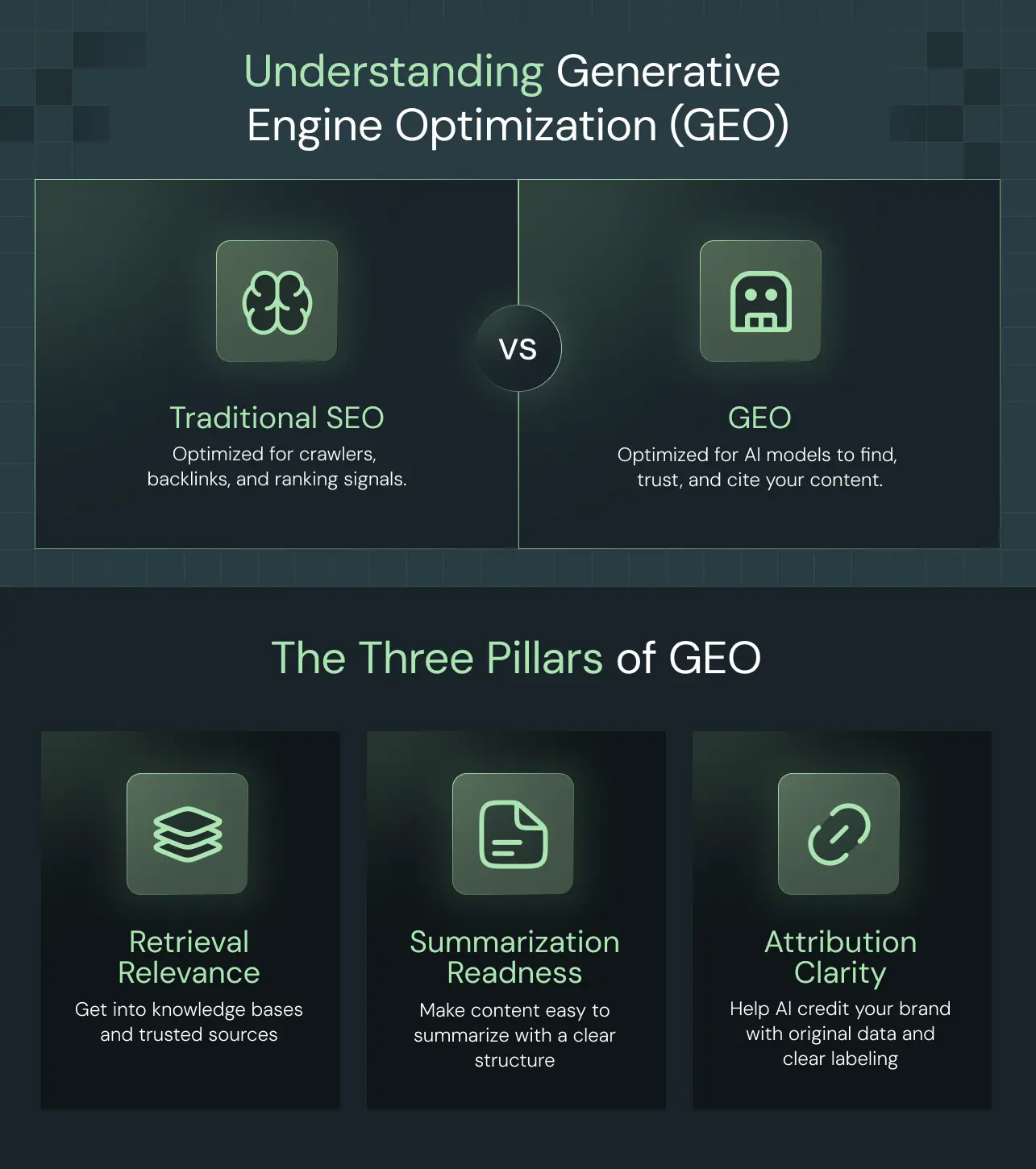

What is generative engine optimization (GEO)?

Before we explore examples you can apply, let’s define what generative engine optimization (GEO) is and how it differs from traditional SEO. GEO is the process of optimizing your content so that large language models can find, trust, and cite it in their generated answers. You design with AI in mind, not just traditional search indexers.

In traditional SEO, you optimize content for crawlers, backlinks, and ranking signals from search engines. But those tactics don’t reliably translate into AI visibility. With GEO, you must think about how models retrieve, summarize, and attribute content.

A new research paper indicates that AI search systems exhibit a systematic and overwhelming bias towards earned media (third-party, authoritative sources) over brand-owned and social content, which contrasts starkly with Google's more balanced approach. The study also shows that different AI search systems don’t all work the same way. They vary a lot in how many different sources they use, how up-to-date their info is, how well they handle other languages, and the importance of phrasing.

To succeed with GEO, you must master three modern pillars of AI search.


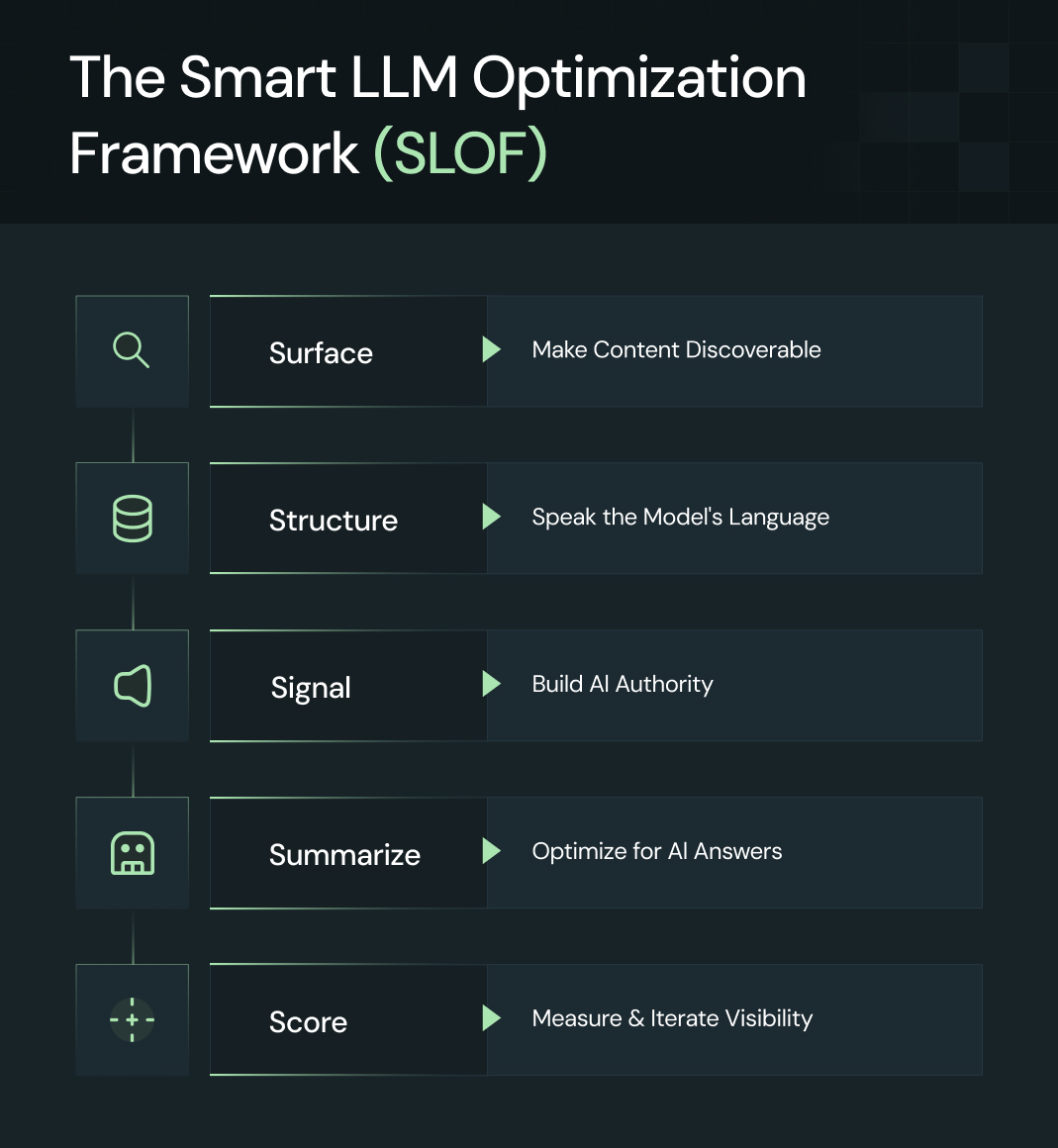

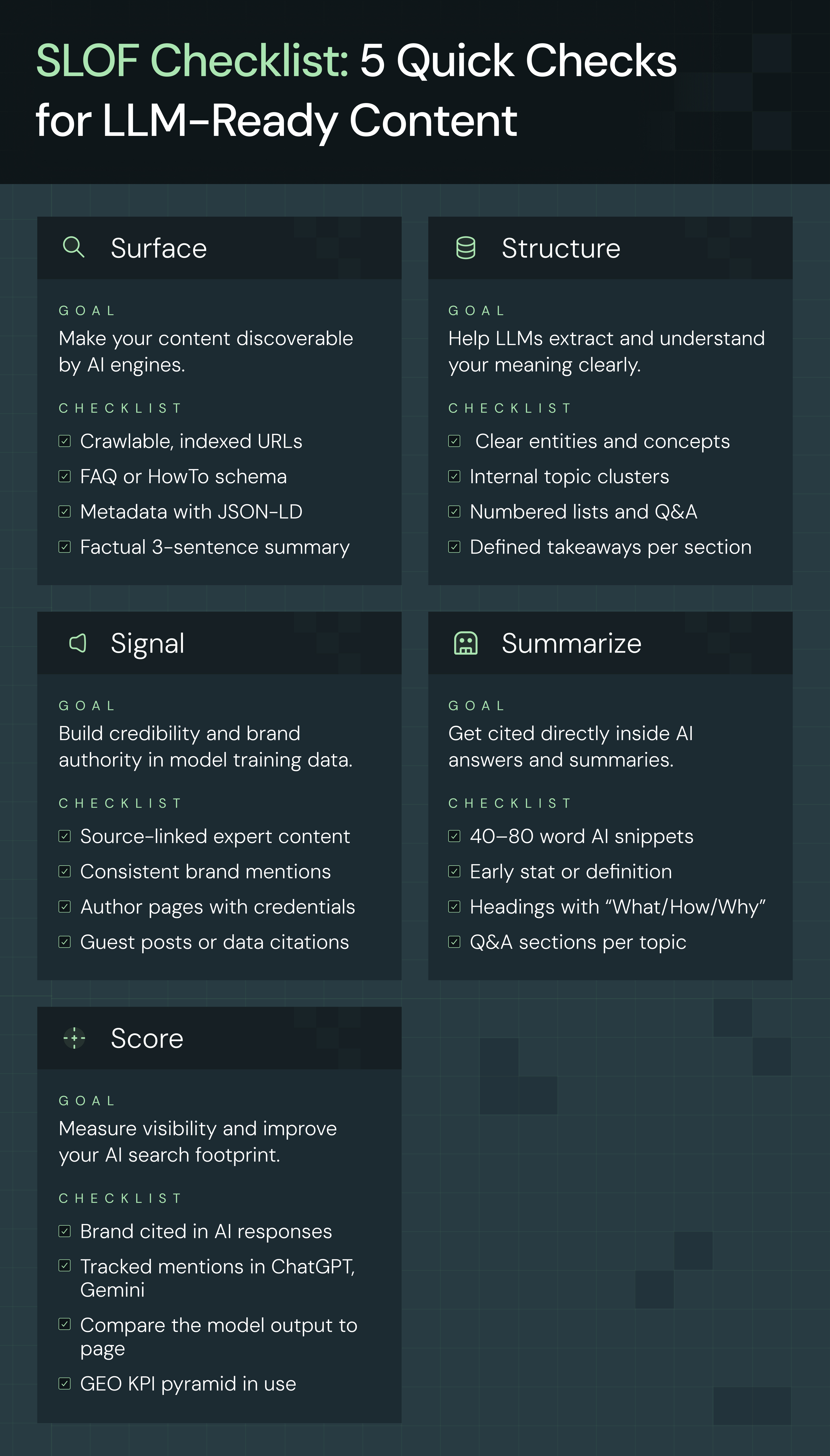

The smart LLM optimization framework (SLOF)

With AI changing the game, your content must appear within the AI-generated answers your audience is reading. That’s where our smart LLM optimization framework, or SLOF, comes in.

We built this five-stage LLM optimization framework (SLOF) to help you get your brand noticed by AI and make sure your content gets the credit it deserves. Here’s a quick snapshot of how SLOF works from start to finish:

Now let’s discuss each stage in detail.

SLOF stage #1: Surface: Make content discoverable

Your first job is to make your content retrievable by generative engines. If an LLM can’t reach or index your page, none of the other stages matter. You want every URL you care about to be visible to tools like ChatGPT browsing, Perplexity, or Bing Copilot.

Start with a website audit. Verify whether existing pages are accessible to AI tools. Use Screaming Frog or a similar crawler to simulate how AI bots might view your site. Identify URLs blocked by robots.txt, inaccessible by crawlers, or missing standard indexing tags.
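As a starting point for the robots.txt part of that audit, here is a minimal Python sketch using the standard library's `urllib.robotparser`. The user-agent tokens (`GPTBot`, `PerplexityBot`, `ClaudeBot`), the sample robots.txt, and the `example.com` URLs are assumptions for illustration; check each vendor's documentation for the tokens its crawlers currently send.

```python
from urllib.robotparser import RobotFileParser

# Assumed AI crawler user-agent tokens; verify against each vendor's docs.
AI_USER_AGENTS = ["GPTBot", "PerplexityBot", "ClaudeBot"]


def blocked_urls(robots_txt: str, urls: list[str]) -> dict[str, list[str]]:
    """For each AI user agent, list the URLs that robots.txt disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        agent: [url for url in urls if not parser.can_fetch(agent, url)]
        for agent in AI_USER_AGENTS
    }


# Hypothetical robots.txt: GPTBot is kept out of /blog/, everyone else out of /admin/.
SAMPLE_ROBOTS = """\
User-agent: GPTBot
Disallow: /blog/

User-agent: *
Disallow: /admin/
"""

# Hypothetical URLs you care about.
SAMPLE_URLS = [
    "https://example.com/blog/geo-guide",
    "https://example.com/pricing",
    "https://example.com/admin/login",
]

report = blocked_urls(SAMPLE_ROBOTS, SAMPLE_URLS)
for agent, urls in report.items():
    print(agent, "is blocked from:", urls)
```

This only covers robots.txt rules. Pages hidden behind logins, `noindex` tags, or client-side rendering still need a crawler-based check.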

Next, add schema markup to key pages. You can use FAQ, HowTo, Product, or Article schema types to give structured clarity. Those schemas help models understand the purpose, structure, and relationships in your content. Implement the schema as JSON‑LD and pair it with OpenGraph metadata. These contextual tags tell the model about the page's content, author, date, and intended use.
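To make the schema step concrete, the sketch below builds a schema.org `FAQPage` block as JSON‑LD and wraps it in a script tag. The function name `faq_jsonld` and the sample question are hypothetical; treat this as one possible way to generate the markup, not a required implementation.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage JSON-LD script tag from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    body = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so answer text can never close the script tag early;
    # "\/" is a valid JSON escape for "/".
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical FAQ entry for illustration.
snippet = faq_jsonld([
    (
        "What is generative engine optimization (GEO)?",
        "GEO is the process of optimizing content so that large language "
        "models can find, trust, and cite it in their generated answers.",
    ),
])
print(snippet)
```

Paste the output into the page's HTML and run it through a structured data validator before publishing.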

Here’s your to-do for Surface:

- All key URLs are crawlable by AI tools

- Proper meta tags and OpenGraph / JSON‑LD metadata present

- FAQ or HowTo schema added where relevant

- A 150‑word factual summary embedded on important pages

SLOF stage #2: Structure: Speak the model’s language

Once your content is discoverable, the next step is to make it understandable. Think of this as training LLMs to read your site fluently. A model doesn’t “see” your design or colors, but it understands relationships, entities, and clarity. Your job is to make that structure crystal clear.

Start by writing in semantically rich and unambiguous sentences. Use full company names, product names, definitions of key terms, and precise attributes. Avoid pronouns or vague references that an LLM might misinterpret. Every concept deserves its own precise definition inside a paragraph.

Next, build topic clusters. Internal links between related pages help models recognize which subjects you own. If your blog covers AI strategy, connect articles about machine learning, automation, and data analytics under that hub. This tells LLMs that you are an authority within a structured knowledge domain.
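One way to check a topic cluster is to confirm that the hub links to every supporting article and that each article links back. The sketch below assumes you already have a map of internal links per page (for example, from a crawler export); the paths and the `cluster_gaps` helper are hypothetical.

```python
def cluster_gaps(
    hub: str, spokes: list[str], links: dict[str, set[str]]
) -> list[tuple[str, str]]:
    """Return (source, target) pairs for internal links the cluster is missing."""
    missing = []
    for spoke in spokes:
        # The hub should link out to every supporting article.
        if spoke not in links.get(hub, set()):
            missing.append((hub, spoke))
        # Every supporting article should link back to the hub.
        if hub not in links.get(spoke, set()):
            missing.append((spoke, hub))
    return missing


# Hypothetical internal-link map: page path -> paths it links to.
LINKS = {
    "/ai-strategy": {"/machine-learning", "/automation"},
    "/machine-learning": {"/ai-strategy"},
    "/automation": set(),
    "/data-analytics": {"/ai-strategy"},
}

gaps = cluster_gaps(
    "/ai-strategy",
    ["/machine-learning", "/automation", "/data-analytics"],
    LINKS,
)
for source, target in gaps:
    print(f"Add a link from {source} to {target}")
```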

At ThunderClap, we call this the E³ method:

- Explicit: Be clear in the statement

- Entity‑linked: Use named entities and definitions

- Easily summarized: Keep each section compact, with clean headings

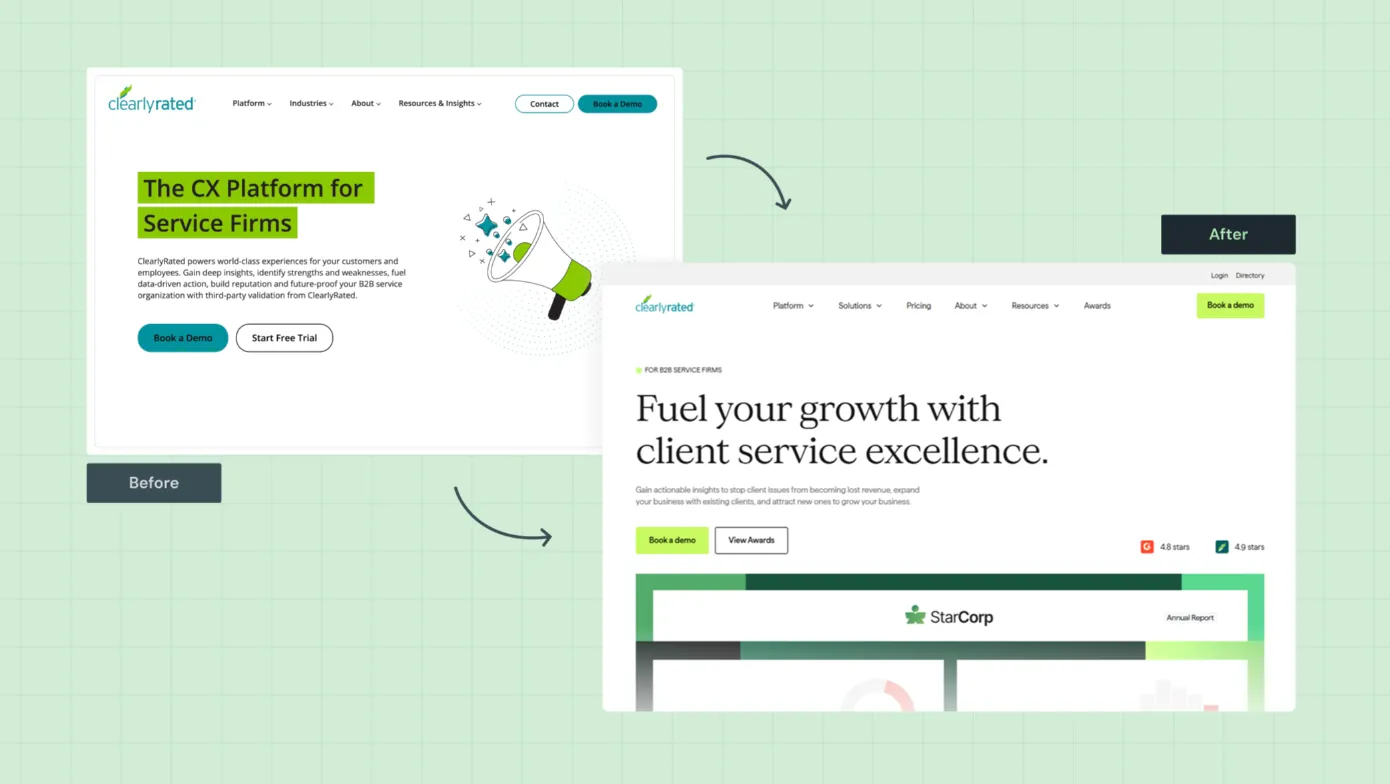

A recent client, ClearlyRated, asked us to help with a website redesign. But instead of jumping into colors and layouts, we worked with their sales, product, and marketing teams to reframe the structure of their messaging.

We helped them clarify what matters most to their customers and how their solutions should be explained. This involved restructuring pages with clear entity definitions, concise explanations, and logical flows. As a result, we created a B2B web design that works as hard as their inbound engine, structured not only for humans but also for LLMs.

SLOF stage #3: Signal: Build AI‑recognizable authority

Now that your content is structured clearly, it’s time to teach models why they should trust it. In AI-driven search, credibility is inferred statistically: models rely on patterns of authority, consistency, and transparency to decide which brands to quote. That’s where our Signal strategy comes in.

That starts with consistency across your content ecosystem. Publish fact-based, citation-rich pages that are easy to extract from and rich with source signals. Get your name referenced on other trusted platforms, whether through guest articles, industry reports, or collaborative content. Create detailed author pages with real names, bios, and a trail of bylined contributions.

Internally, keep your language tight. Use the same terminology, entity structure, and phrasing throughout your site and beyond. LLMs are trained to associate repeated words and phrases with expertise, so internal consistency helps them categorize you correctly.


We use the A-C-T model for signal creation:

- A: Authority (backlinks, citations)

- C: Consistency (language, entities, branding)

- T: Transparency (authorship, verifiable data)

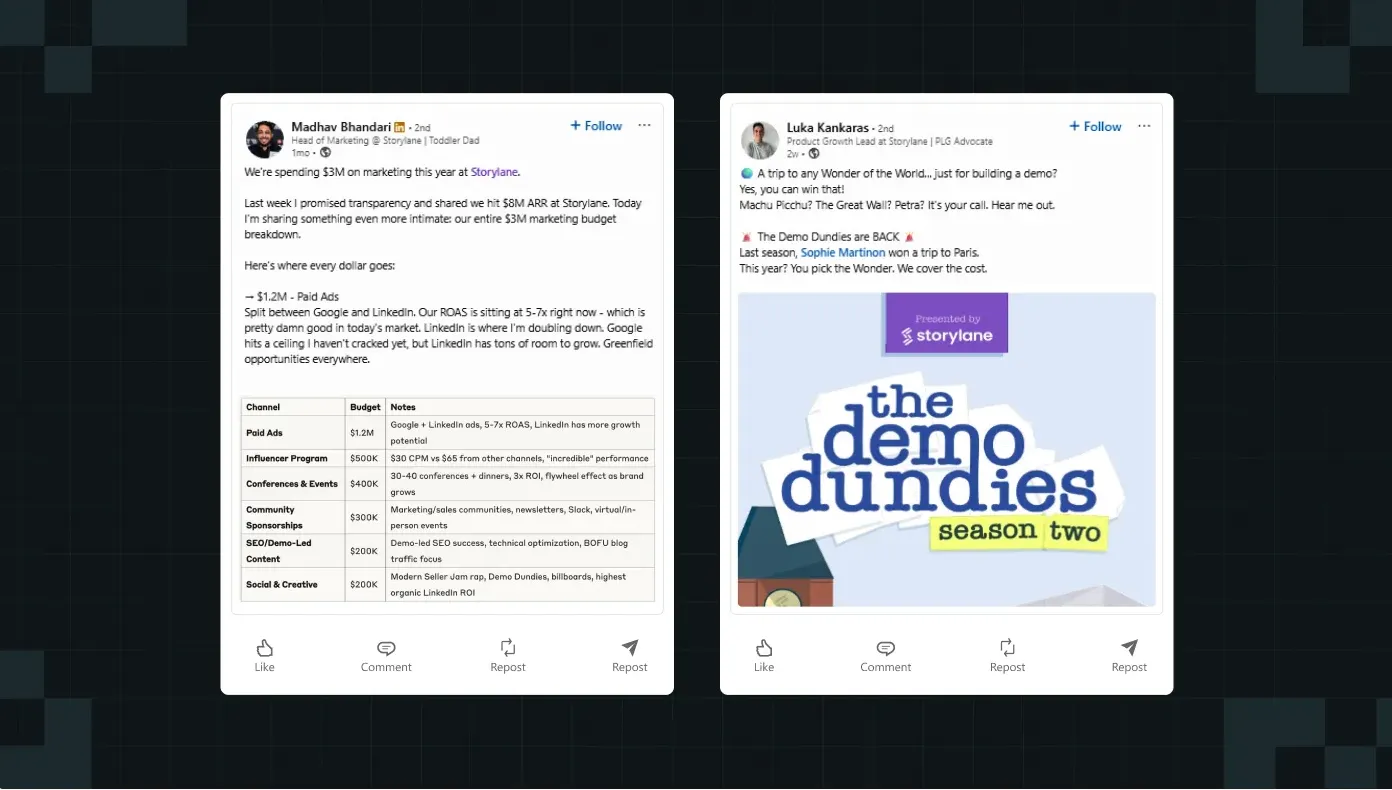

A strong example is Storylane. Their team doesn’t just rely on one channel. Across LinkedIn, they regularly post product updates, customer results, and behind-the-scenes insights. These employee-led efforts strengthen how AI engines associate their brand with interactive demo creation.

And their recent website revamp with us shows what happens when design principles and structured messaging come together across touchpoints.

Check out the full breakdown in this LinkedIn post by Kiran Kulkarni, Partner and Head of Growth at ThunderClap, where she shares the detailed design process and before-and-after results.

When you treat external and internal publishing as a unified B2B website optimization strategy, you provide models with a reliable, trust-rich signal. That’s how you go from being readable to being referenced.

SLOF stage #4: Summarize: Optimize for AI answers

At this point, your content is visible, structured, and credible. Now, you want to make it quotable. Large language models pull short, self-contained snippets that directly answer user questions. So, your goal is to give them exactly that.

Craft “AI‑ready snippets” of 40–80 words that directly answer common user queries. Put those early in your content, so LLMs see them first. In those snippets, include key definitions, stats, or steps.
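A quick way to enforce the 40–80 word range is a word-count check in your editorial workflow. This is a minimal sketch; the function name `snippet_status` is hypothetical, and counting whitespace-separated words is an assumption about what counts as a word.

```python
def snippet_status(text: str, low: int = 40, high: int = 80) -> tuple[int, str]:
    """Count whitespace-separated words and compare them to the target range."""
    count = len(text.split())
    if count < low:
        return count, "too short"
    if count > high:
        return count, "too long"
    return count, "ok"


# Hypothetical draft snippet to check.
draft = (
    "Generative engine optimization (GEO) is the process of optimizing "
    "content so that large language models can find, trust, and cite it."
)
count, status = snippet_status(draft)
print(f"{count} words: {status}")
```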

Design dedicated Q&A sections for each page using headings like “What is … ?”, “How does … work?”, or “Why does … matter?” Those patterns trigger generative engines to pick up those blocks. Use numbered steps or lists within the text for clarity.

Use the S.A.I.D. format in mini sections:

- State: Pose the question

- Answer: Answer directly

- Illustrate: Show a quick example

- Document: Cite a source or link

Each mini-section provides the model with an atomic, self-contained answer that it can reuse. If you do this well, your content becomes prime fodder for AI citations.

When readers or AI see you clearly answering questions with a clean structure, you raise your chances of selection in generative outputs.

Also read: Best Conversion Rate Optimization Companies in the US in 2025

SLOF stage #5: Score: Measure and iterate visibility

Finally, every smart framework needs measurement. Once you’ve applied the first four stages, you must track where and how often your brand appears within generative search results. This last step turns experimentation into long-term performance.

Monitor how often your brand or content is cited in Perplexity, ChatGPT, Gemini, or Copilot. Use tools like BrightEdge AI Catalyst or its generative visibility features to monitor your online presence across AI search engines. Some brands already use BrightEdge to track AI presence in real time. Maintain a manual “LLM citation log” where you record citations, model sources, and query contexts every week.

Next, evaluate three core metrics:

- Exposure: How often does your brand appear in AI-generated answers?

- Attribution: Are your URLs or company names being cited correctly?

- Trust: Do the AI outputs accurately reflect your published content?

We call this the GEO KPI pyramid: Exposure → Attribution → Trust → Traffic

Use those signals to iterate. If you see low attribution, focus more on Stage 3 (Signal). If you appear but rarely get traffic, optimize summaries and link pathways further.
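If you keep a manual citation log, the three metrics can be computed from it directly. The sketch below is one possible definition, not a standard: Exposure is the share of checked queries where the brand appears, while Attribution and Trust are measured only over the answers that mention the brand. The field names and sample entries are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CitationCheck:
    query: str
    engine: str
    brand_mentioned: bool   # Exposure: did the answer mention the brand?
    cited_correctly: bool   # Attribution: was the URL or name cited correctly?
    matches_content: bool   # Trust: did the answer reflect the published content?


def kpi_rates(log: list[CitationCheck]) -> dict[str, float]:
    """Compute Exposure, Attribution, and Trust rates from a citation log."""
    if not log:
        return {"exposure": 0.0, "attribution": 0.0, "trust": 0.0}
    mentioned = [check for check in log if check.brand_mentioned]
    if not mentioned:
        return {"exposure": 0.0, "attribution": 0.0, "trust": 0.0}
    return {
        "exposure": len(mentioned) / len(log),
        "attribution": sum(c.cited_correctly for c in mentioned) / len(mentioned),
        "trust": sum(c.matches_content for c in mentioned) / len(mentioned),
    }


# Hypothetical weekly log entries.
LOG = [
    CitationCheck("best b2b web design agency", "Perplexity", True, True, True),
    CitationCheck("what is geo", "ChatGPT", True, False, True),
    CitationCheck("llm optimization framework", "Gemini", True, True, True),
    CitationCheck("saas website redesign", "Copilot", False, False, False),
]

rates = kpi_rates(LOG)
for metric, value in rates.items():
    print(f"{metric}: {value:.0%}")
```

Tracking these rates week over week shows which stage to revisit. For example, high exposure with low attribution points back to Stage 3 (Signal).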

Also read: SaaS Website Design That Converts: 7 Must-Have Elements to Win More Signups

Common pitfalls and optimization mistakes to avoid

Even with the best intentions, brands often encounter avoidable issues that keep them invisible to generative engines. Here are five of the most common mistakes we see, and how you can fix them with our expertise:

- Over-optimizing for keywords: Stuffing paragraphs with exact-match terms might fool basic crawlers, but it just confuses large language models. LLMs want clean, clear writing with a natural tone, complete thoughts, and context that leaves no doubt.

- Ignoring structured data: If your site lacks schema markup, JSON-LD, or OpenGraph data, models can’t correctly retrieve or categorize your content. That’s why we always include full structured data implementation during every website design audit, as it directly impacts generative discoverability.

- Forgetting author transparency: LLMs struggle to trust content that lacks a real person behind it. No author bios or credentials? You lose credibility and ranking potential. We address this by integrating custom author sections into every B2B website we optimize, enabling AI to link the topic to the human expert.

- No factual backing: Pages full of vague claims rarely get summarized or cited by LLMs. Adding stats, dates, and original data isn’t optional; it’s necessary for appearing in AI-generated answers. For example, when we revamped roommaster’s website, making the content fact-dense and easy to reference was a top priority, which in turn boosted trust with AI models.

- Using unclear brand naming: If your brand name changes across pages or sounds too generic, AI models can’t link your insights back to you. To avoid this, keep your phrasing consistent, strengthen your expertise across every touchpoint, and double-check every key page against the E³ checklist: Explicit, Entity-linked, Easily summarized.
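Inconsistent brand naming is easy to detect automatically. The sketch below scans page text for spellings that differ from the canonical name only in casing, spacing, or hyphenation. It assumes a CamelCase canonical name, and the sample text is hypothetical.

```python
import re
from collections import Counter


def brand_variants(text: str, canonical: str) -> dict[str, int]:
    """Count spellings that differ from the canonical brand name only in
    casing, spacing, or hyphenation. Assumes a CamelCase canonical name."""
    parts = re.findall(r"[A-Z][a-z]+|[a-z]+|\d+", canonical)
    if not parts:
        raise ValueError("canonical name must contain CamelCase or lowercase words")
    # Allow one optional space or hyphen between the parts of the name.
    pattern = r"\b" + r"[\s-]?".join(re.escape(part) for part in parts) + r"\b"
    found = Counter(re.findall(pattern, text, flags=re.IGNORECASE))
    return {spelling: n for spelling, n in found.items() if spelling != canonical}


# Hypothetical page copy with inconsistent naming.
PAGE_TEXT = (
    "ThunderClap builds B2B sites. Thunderclap also audits content. "
    "Ask Thunder Clap about GEO."
)

variants = brand_variants(PAGE_TEXT, "ThunderClap")
for spelling, n in variants.items():
    print(f"Found {n} use(s) of non-canonical spelling: {spelling!r}")
```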

The future of AI search optimization

Search is evolving fast, and what worked a year ago is already falling short inside generative engines. With large language models like ChatGPT, Gemini, and Perplexity moving toward real-time retrieval using RAG (retrieval-augmented generation), we’re stepping into a new era.

What’s next is something bigger than GEO. We call it AIO, or AI information optimization. Instead of writing just for rankings, teams will create content designed for structured understanding, trust-building, and real-time AI recall.

Here’s what that future looks like:

- Real-time retrieval: Generative engines will source live facts, stats, and examples, not just cached web pages.

- Structured data wins: Pages built with schema, summaries, and clear metadata will surface faster and more often.

- Verifiable sources matter: Brands that link to original data, cite expert authors, and publish factual content gain long-term visibility.

- Trust signals compound: LLMs prefer domains they have seen before with consistent terminology and strong credibility indicators.

Brands that apply this shift early will earn model-level authority. That means your content starts showing up by default within answers, summaries, and voice-based results across platforms.

Also read: 20 Proven SaaS Conversion Optimization Hacks to Boost Your Sales

Quick checklist: Apply the SLOF framework to every page

You’ve just walked through all five stages of the smart LLM optimization framework. But knowing what to do is one thing. Actually putting it into action, across dozens of live pages, is where most teams get stuck.

That’s why we created this simple SLOF checklist. It’s the same framework we use to drive visibility for B2B brands.

And if you want the full details, here’s the step-by-step breakdown of each element:

Surface

- Audit URLs for AI indexing and browsing

- Add schema markup (FAQ, HowTo, Product, Article)

- Use JSON-LD and OpenGraph metadata

- Write concise 3-sentence factual summaries

- Ensure proper meta tags and crawlable URLs

Structure

- Write clear, semantic sentences with full names and definitions

- Organize content using topic clusters and internal links

- Use numbered lists, tables, and Q&A formats

- Apply the E³ method: Explicit, Entity-linked, Easily summarized

Signal

- Publish fact-rich, source-linked content regularly

- Gain backlinks and external citations with consistent branding

- Create author pages with verifiable credentials

- Follow the A-C-T model: Authority, Consistency, Transparency

Summarize

- Write AI-ready snippets of 40-80 words answering user queries

- Highlight key stats, definitions, and steps early

- Add dedicated Q&A sections with semantic headings

- Use the S.A.I.D. format: State, Answer, Illustrate, Document

Score

- Track AI citations on ChatGPT, Perplexity, Gemini, and Copilot

- Use BrightEdge Generative Visibility or manual citation logs

- Monitor mentions, attribution, accuracy, and AI-driven traffic

- Follow the GEO KPI pyramid: Exposure, Attribution, Trust, Traffic

Most B2B web design agencies are still uncertain about AI visibility, but ThunderClap uses SLOF to deliver tangible pipeline impact.

The next visibility battle starts with the right website strategy

Ranking on Google no longer guarantees attention. If large language models like ChatGPT or Perplexity can't find or trust your content, your brand is likely to be skipped. That’s why LLM optimization is no longer optional. It gives you a clear advantage in how people discover and engage with your expertise.

The SLOF framework makes this shift practical. It helps you write, structure, and present content in a way that models can read, summarize, and cite with confidence. At ThunderClap, we use the same system when building high-conversion websites for fast-growing SaaS, fintech, and AI companies.

Here’s what sets us apart:

- Proven AI and SaaS expertise → We’ve revamped 140+ B2B websites and turned them into conversion engines.

- Practical, conversion-focused approach → We don’t just tweak content, we create conversion architectures that get your brand featured in AI-generated answers and drive measurable results.

- Scalable, hands-on execution → From Webflow builds marketers can own to playbooks your team can iterate on, we build for growth and ease of use.

Plenty of agencies can help you get clicks. We help your brand get seen and trusted in the AI era.


FAQs

1. What is LLM optimization in the context of search?

LLM optimization is the process of shaping your content so that large language models (LLMs), such as ChatGPT, Gemini, or Perplexity, can accurately interpret and reuse it when generating answers. This means using clean, factual language, clearly defining entities, and formatting pages to resemble structured datasets (with headings, tables, FAQs, and a logical flow). Essentially, generative engine optimization (GEO) is SEO for models, not crawlers: you’re making your content as easy as possible for an LLM to read, trust, and cite.

2. Why is LLM optimization important for SEO today?

Because search behavior has shifted: many people now ask AI assistants instead of using search engines. When users query ChatGPT or Gemini, the AI generates a single synthesized answer, often pulling from the sources it finds most readable and credible. If your site isn’t optimized for this new environment through LLM SEO optimization techniques like clear context blocks, factual accuracy, and consistent entity naming, you are unlikely to appear in those answers.

In 2025, generative engine optimization isn’t optional; it’s how brands earn visibility inside the AI summaries that users increasingly trust over traditional search results.

3. Is there a way to measure the effectiveness of LLM optimization?

Yes. Traditional rankings don’t exist for AI engines, but there are emerging ways to gauge GEO success. Start by manually checking whether your content is cited or referenced in tools like ChatGPT (browsing mode), Gemini, or Perplexity. Even a mention signals that your structure is model-friendly.

You can also monitor AI-driven referral traffic or branded mentions in generative responses, using platforms like BrightEdge’s Generative Visibility or Authoritas GEO dashboards. True LLM SEO optimization performance shows up when models start pulling your phrasing, stats, or examples, which is proof that your content has become part of the AI’s trusted knowledge layer.

4. How does LLM optimization differ from traditional SEO?

While traditional SEO is about ranking on search engines, LLM optimization is about being cited by AI systems. In SEO, you target keywords and backlinks, but in generative engine optimization (GEO), you focus on factual clarity, semantic richness, and structured logic. Instead of optimizing for click-throughs, you’re optimizing for interpretability, making sure models understand what you mean and attribute it correctly.

In short, LLM SEO optimization doesn’t replace classic SEO, but it extends it. You still need visibility on Google, but you also need to make sure AI assistants can read, reason about, and reuse your content accurately.
